Video speed dating start-up Filteroff had a growing problem with scam accounts bothering genuine users and turned to artificial intelligence (AI) for a solution. After flagging the problematic profiles, the team deployed an army of chatbots built on OpenAI’s GPT-3 large language model to keep the scammers occupied with realistic conversations.

The downside, co-founder Brian Weinreich told Tech Monitor, is that “the scammers are always incredibly angry” when they find out they’ve been played, and are likely to spam app stores with bad reviews.

Any social app comes with its scammers, spammers and generally unsavoury groups of users that leave others feeling uncomfortable or unwelcome. Solving the problem isn’t easy or cheap, with companies as large as Meta and Google still plagued with issues every day.

For dating apps, a user might sign up and find someone who seems perfect for them. They chat and arrange a date, but before the meeting can take place a problem occurs: the victim of the scam might get a message claiming their date’s car broke down and they need money urgently to fix it. Once the scammer has received the money, they drop all contact.

For a small company armed with little more than “two staplers, a handful of pens, and a computer charger”, as Filteroff CEO Zach Schleien put it, the problem could seem impossible to solve. But rather than sit back and let the scammers win, ruining the experience for everyone else, Schleien and Weinreich hatched a plan.

“We identified the problem, built a Scammer Detection System and then placed the scammers in a separate ‘Dark Dating Pool’,” Schleien said.

Not content with just fencing them off to talk to each other, the pair dropped in an army of bots full of profiles with fake photos and hooked them into OpenAI’s massive GPT-3 natural language processing model that gave them the ability to keep the scammers talking.

How Filteroff’s scammer detection system works

“When a user signs up for Filteroff, our scammer detection system kicks into full gear, doing complex maths to determine if they are going to be a problem,” says Weinreich.

“When a scammer is detected, we snag them out of the normal dating pool and place them in a separate dark dating pool full of other scammers and bots. We built a bot army full of profiles with fake photos and some artificial intelligence that lets our bots talk like humans with the scammers.”

This lets the bots sound like humans, leaving the scammers with no clue that they’ve been detected and making it less likely they’d just try again with a new profile. It also led to some “hilarious bot+scammer conversations”, Weinreich says.
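The routing Weinreich describes can be pictured with a minimal sketch. The article does not say how the detection score is calculated or where the cut-off sits, so the `scam_score` field, the `SCAM_THRESHOLD` value and the function names here are all assumptions made for illustration, not Filteroff’s actual code.

```python
from dataclasses import dataclass

# Hypothetical cut-off: the article does not disclose how scores are
# computed or what threshold Filteroff uses.
SCAM_THRESHOLD = 0.8


@dataclass
class User:
    user_id: str
    scam_score: float  # assumed output of some detection model, 0.0 to 1.0
    is_bot: bool = False


def assign_pool(user: User) -> str:
    """Return the dating pool a user is matched in: 'main' or 'dark'."""
    # Decoy bots live in the dark pool alongside flagged accounts.
    if user.is_bot or user.scam_score >= SCAM_THRESHOLD:
        return "dark"
    return "main"


def visible_matches(viewer: User, candidates: list[User]) -> list[User]:
    """Show a viewer only the candidates that share the viewer's pool."""
    pool = assign_pool(viewer)
    return [
        c for c in candidates
        if c.user_id != viewer.user_id and assign_pool(c) == pool
    ]
```

Because a flagged account still gets a populated list of matches, nothing in its experience signals that it has been moved, which is the point Weinreich makes about keeping scammers from simply re-registering.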

Weinreich told Tech Monitor there are risks involved in this approach, not least because GPT-3 usage is billed by consumption, so superfluous conversations can use a lot of tokens unless they are quickly identified. “Be mindful of your bots,” he warned. “When first setting up our bot army, I had accidentally allowed the bots to converse with one another. They ended up having long, albeit amusing, conversations about dating, which cost quite a bit of money, since the conversations were never-ending.”
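A guard against the runaway costs Weinreich mentions might look something like the following sketch. It is not taken from Filteroff: the `Conversation` fields, the per-conversation budget and the function names are hypothetical, and the token counts are assumed to come from whatever usage figures the model API reports.

```python
from dataclasses import dataclass

# Hypothetical per-conversation budget; a real value would depend on pricing.
MAX_TOKENS_PER_CONVERSATION = 2000


@dataclass
class Conversation:
    sender_is_bot: bool     # who wrote the incoming message
    recipient_is_bot: bool  # who would be answering it
    tokens_used: int = 0    # running total billed to this conversation


def should_bot_reply(convo: Conversation) -> bool:
    """Decide whether to spend tokens generating a reply."""
    # Never let two bots talk to each other: the exchange would never end.
    if convo.sender_is_bot and convo.recipient_is_bot:
        return False
    # Stop replying once the conversation has used up its budget.
    return convo.tokens_used < MAX_TOKENS_PER_CONVERSATION


def record_usage(convo: Conversation, prompt_tokens: int, completion_tokens: int) -> None:
    """Add the tokens consumed by one model call to the running total."""
    convo.tokens_used += prompt_tokens + completion_tokens
```

Checking `should_bot_reply` before every model call keeps both failure modes, bot-to-bot loops and endless scammer chats, from turning into an open-ended bill.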

Even when left free to bother the scammers, the bot solution doesn’t always work out perfectly, with the bots contradicting themselves over jobs, hobbies and location. But the scammers don’t seem to care and it appears to be “good enough” to keep them occupied, Weinreich says.

How developers can use AI and bots to defeat scammers

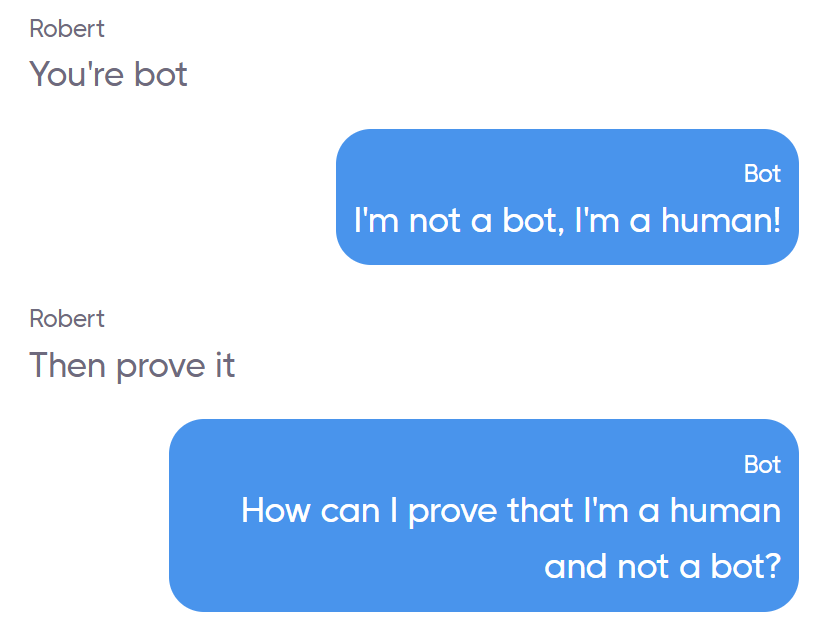

In one conversation a scammer named Robert went back and forth with the bot for some time in an argument over whether it was a bot or not. Robert would say “you’re bot” to which the bot would reply simply “I’m not a bot, I’m a human.”

Every time Robert asked for a video call or even a phone call the bot would reply with “I’m not comfortable with that”. There was a string of “I am” and “you’re not” in response to being called a bot, followed by Robert saying “they use you to trap me here” and the bot responding with “I’m not a trap, I’m a human.”

Eventually, Robert got angry with the bot and started swearing and demanding to speak to the developer. This is common, Weinreich told Tech Monitor. “The scammers were always incredibly angry. I think some of our bad app reviews are due to scammers getting angry at our platform,” he says.

He said that any other developers using AI to deal with scammers and spam accounts, including those contacting customer services, should be aware it will always “be a game of cat and mouse”, and that the best approach is not to let a bad account know you’ve identified it as spam. “Scammers are quite good at creating new accounts, by either reverse engineering your API and setting up a bot to automatically create new accounts, or by sheer brute force,” Weinreich says. “However, they likely won’t create a new account if they think their current setup is working.

“Also, a robust reporting system that users can access will help you quickly identify if there is a new pattern scammers are using to bypass whatever detection system you have in place.”
