The EU needs to do more to support the development of open-source AI in the EU AI Act, a group of leading developers and open-source companies has warned. GitHub, Hugging Face and the Creative Commons Foundation are among the signatories of an open letter to the European Parliament. However, one AI expert told Tech Monitor open source isn’t compatible with safe AI deployment as there is no easy way to take responsibility for misuse.

The open letter was sent to policymakers in the EU and lists a series of suggestions for the European Parliament to consider when drafting the final version of the AI Act. These include clearer definitions of AI components, clarifying that hobbyists and researchers working on open-source models don’t commercially benefit from their code, and allowing real-world testing of AI projects.

Governments around the world are wrestling with the best way to tackle AI safety and regulation. Companies like OpenAI and Google are forming alliances to drive safety research for future models and the UK is pushing for a global approach. The EU has one of the most prescriptive approaches to AI regulation and will have the first comprehensive law.

A research paper on the regulation of AI models has been published alongside the open letter. It argues that the EU AI Act will set a global precedent in the regulation of AI, and that supporting the open-source ecosystem in regulation will help further the goal of managing risk while encouraging innovation.

The act has been designed to be risk-based, taking a different approach to regulation for different types of AI. The companies argue that the rules around open source, and the details of exemptions for non-commercial projects, are not clear enough. They also suggest that rules around impact assessments, which involve third-party auditors, are too burdensome for what are usually not-for-profit projects.

EU AI Act could be the ‘death of open source’

Ryan Carrier, CEO of AI certification body ForHumanity, told Tech Monitor that the EU AI Act is the “death of open source”, describing the open-source approach to technology development as an “excuse to take no responsibility and no accountability”. Carrier says: “As organisations have a duty of compliance, they can no longer rely upon tools that cannot provide sufficient representations, warranties, and indemnifications on the upstream tool.”

He said this means that someone will have to govern, evaluate and stand behind any open-source product for it to be used in production and be compliant with the act. “Open source is useful as a creative process, but it will have to morph with governance, oversight and accountability to survive,” he says. Carrier believes that “if an open source community can sort out the collections of all compliance requirements and identify a process for absorbing accountability and upstream liability, then they could continue.”

In contrast, Adam Leon Smith, chair of the BCS F-TAG group, welcomed the calls for greater acceptance of open-source AI. “Much of what we need from AI technology providers to ensure AI is used safely is transparency, which is a concept built into open source culture,” Leon Smith says. “Regulation should focus on the use of AI not the creation of it. As such, regulators should be careful to minimise constraints on open source.”

Content from our partners

How tech teams are driving the sustainability agenda across the public sector

Finding value in the hybrid cloud

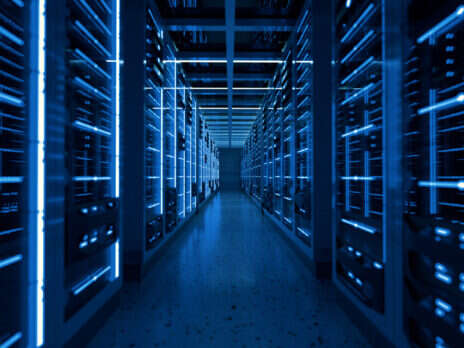

Optimising business value through data centre operations

Amanda Brock, CEO of UK open source body OpenUK, told Tech Monitor the top-down approach of the EU AI Act makes it very difficult for anyone but the biggest companies to understand and comply with the legislation. She agreed that codes of conduct and acceptable use policies are incompatible with open-source software licensing, because such licensing by default allows anyone to use the code for any purpose.

AI development ‘at a crossroads’

A solution could be a further shift towards the approach used by Meta in licensing its Llama 2 large language model. It isn’t available under a true open-source licence as there are some restrictions on how it can be shared and who can use it, including paid licensing for very high-end use cases. This, says Brock, “means that we are in an evolving space and the Commission’s approach to legislation will not work.”

Brock also agreed there is a need to change how open-source projects are handled and classified in the EU AI Act before it gets final approval. “The default is that organisations will be treated as commercial enterprises which are required to meet certain compliance standards under the act with respect to open-source software,” she explained.

Some organisations are exempt if they meet the criteria and comply with certain aspects of good practice. But, says Brock, there are concerns the exemption criteria are not wide enough to cover the full spectrum of open-source projects. This will lead to those working on specific products being caught by the act and unable to comply.

Victor Botev, CTO and co-founder of open research platform Iris.ai, says Europe has some of the best open source credentials in the world and EU regulators need to take steps to keep the sector operational. “With companies like Meta releasing commercial open source licenses for AI models like LLaMA 2, even US industry giants have pivoted to harness the power of the open-source community.”

“We are at a crossroads in AI development,” Botev says. “One way points towards closed models and black box development. Another road leads to an ascendant European tech scene, with the open source movement iterating and experimenting with AI far faster and more effectively than any one company – no matter how large – can do alone.”

Botev believes achieving the open model requires greater awareness among the wider population of the benefits of AI systems and of how they actually operate. This, he says, will spawn more fruitful discussions on how to regulate them without resorting to hyperbolic dialogue. “In this sense, the community itself can act as an ally to regulators, ensuring that we have the right regulations and that they’re actionable, sensible and fair to all,” he explains.
